Autonomous vehicles and moral decisions: what do online communities think?

In 2016, researchers at CNRS (members of TSE – Université Toulouse Capitole), MIT, Harvard University and the University of British Columbia launched the “Moral Machine” online platform to survey users about the moral dilemmas raised by the development of autonomous vehicles. The researchers gathered 40 million decisions from millions of web users worldwide. The results reveal global moral preferences that may guide policymakers and companies in the future. The analysis of this data was published in Nature on October 24, 2018.

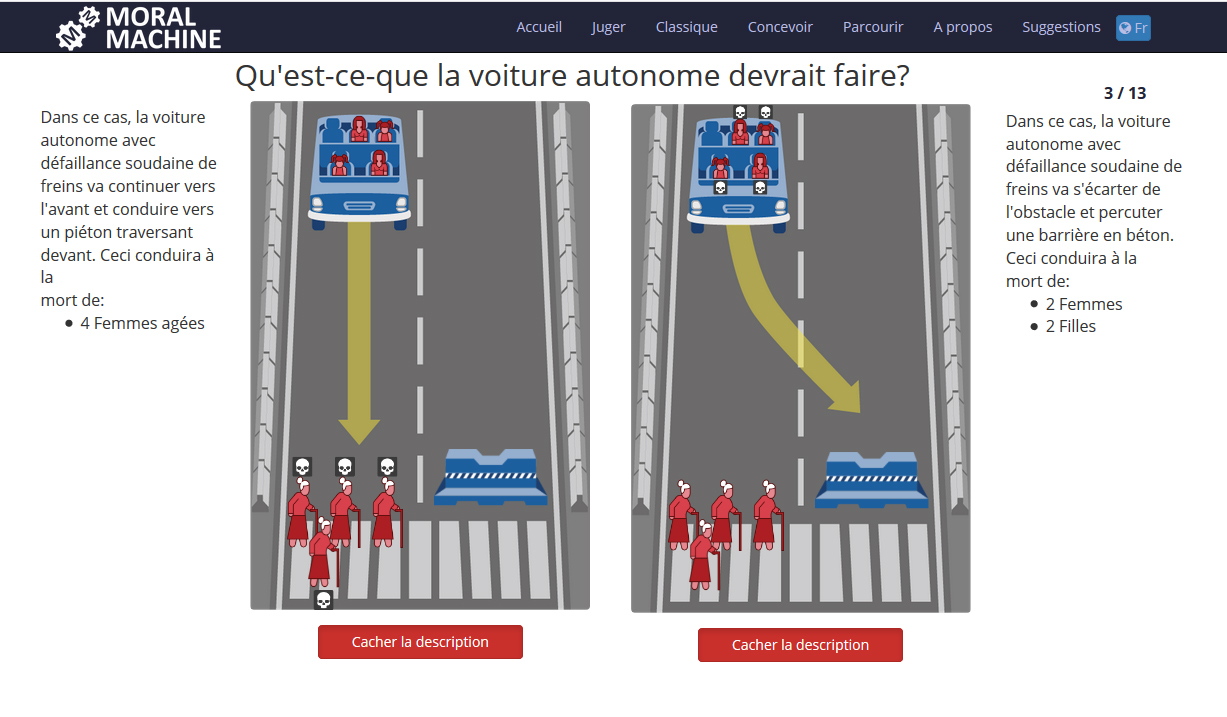

Would you hit a woman pushing a stroller, or swerve into a wall and possibly kill the vehicle's four passengers? This is the kind of dilemma that autonomous vehicles, and even more so the people who program them, must face. In 2016, a research team launched the Moral Machine platform to ask people to decide what the “fairest” option was. For 18 months, the researchers gathered responses to document the moral preferences of web users in 233 countries and territories worldwide.

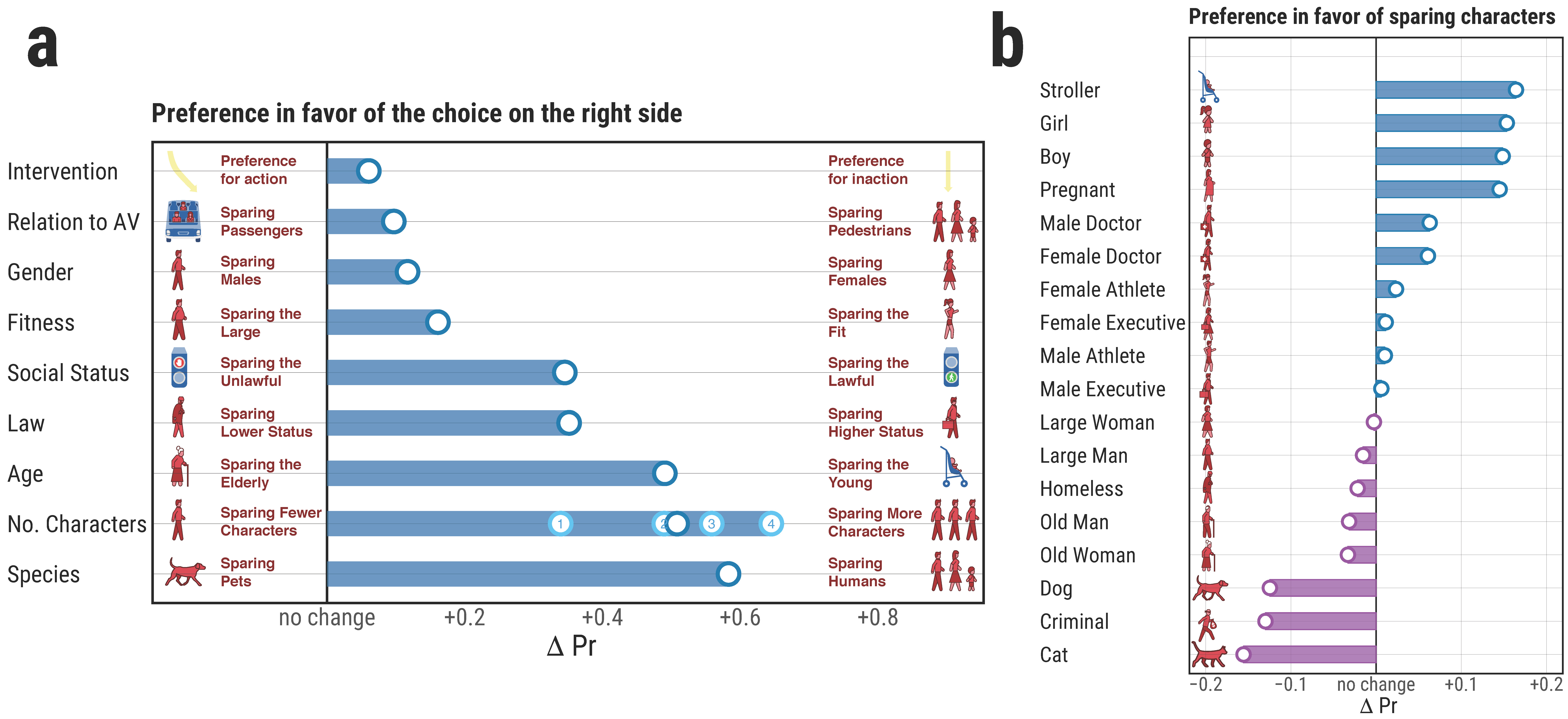

The data revealed three main moral criteria: saving humans over animals, saving the largest number of lives, and saving the young over the old. Accordingly, the characters spared most often in the scenarios proposed by Moral Machine were babies in strollers, children, and pregnant women.

The results also reveal far more controversial moral preferences: overweight people have about a 20% higher probability of being killed than fit people, and poor people have a 40% higher probability of dying than rich people. The same holds for people who cross against traffic signals compared with those who obey them. The researchers also noted that users in developed countries with strong laws and institutions spare pedestrians who cross outside a designated crosswalk less often than users in less developed countries.

The data gathered is public and accessible to all, and the authors hope it will be examined by governments considering legislation on autonomous vehicles and by companies programming these vehicles. The goal is not necessarily to follow the wishes of Moral Machine respondents, but to provide a global picture of people’s moral preferences. The researchers are cautious about claiming that the study is representative, since participants were volunteers rather than a sample selected by statistical sampling methods.

The authors have made available a website where the public can explore the Moral Machine results country by country, and compare countries.

Visit the Moral Machine website: http://moralmachine.mit.edu/

Visit the website presenting the Moral Machine results (embargo password: media): http://moralmachineresults.scalablecoop.org

© Edmond Awad et al. Nature.

Fig. b: Relative advantage or disadvantage of each category of character compared with an adult (male or female). For example, the probability of a girl being spared is 0.15 higher than that of an adult being spared.

© Edmond Awad et al. Nature.

The Moral Machine Experiment. Edmond Awad, Sohan Dsouza, Richard Kim, Jonathan Schulz, Joseph Henrich, Azim Shariff, Jean-François Bonnefon, Iyad Rahwan. Nature, 2018.